A couple of months ago I started annoying people by telling them that I’d like to show the Ubuntu Berlin community and c-base to Mark Shuttleworth. On the one hand he is personally interested in community, and on the other hand Ubuntu Berlin is a great example of one. So this was more about inviting an important member of the community than about holding a „meet and greet“. Of course, telling people about plans like this makes them smile, but when you raise your finger and say „It will happen“ with certainty, they get uncertain. The plan was actually to invite Mark to one of our great traditional release parties, which you shouldn’t miss if you are around Berlin at release time.

By chance, the Ubuntu Developer Sprint for the upcoming Jaunty release took place in Berlin this week. If I got it right, the Canonical Ubuntu developers meet for five days, about two weeks before a release’s feature freeze, and work in groups on issues that need to be decided, designed, or simply fixed right away. The incredible Daniel Holbach had the idea of inviting the whole bunch of developers to c-base after their work. So he did, and we scheduled it for an evening when the Ubuntu Berlin crew meets at c-base anyway for their monthly jour fixe.

We did not announce this meeting publicly, because we wanted to make the whole evening as comfortable as possible for everyone. And we succeeded, I think. Right on time at 19:30 this Wednesday evening, about thirty Ubuntu developers entered c-base. Among them was Mark, who seemed to like the whole c-base hackerspace, Ubuntu Berlin, community, space and future thing a lot. The Canonical crowd got several guided tours through the whole base from __t, while housetier provided the others with „German beer“ and Club Mate. We had a lot of chats in smaller groups: the things you always wanted to ask the developer of your choice, plus relaxed small talk about space, canoeing, the c-base project „OpenMoon“ (trying to send a rocket to the moon), and more. Mark seemed really keen on asking people why they had joined the Ubuntu Berlin team, what they were currently working on and so on; in short, what the community thing is about right here and right now.

We had no schedule for the evening, so we spent about two and a half hours at c-base without any official part, followed by a cosy dinner in smaller groups. The only thing we did was ask some people for an interview for the c-base statement studio channel, where people like Nokia’s Peter Schneider and Mozilla’s CEO John Lilly have already appeared. Mark took the time for an interview with jocognito. The results of these short talks are already online on YouTube:

Interview #1

Interview #2

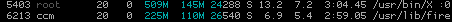

Actually, I don’t know if I’ll really remember the figures for dozens of machines, but hey, it’s just additional fun.