BackupPC is a neat server-based backup solution. In Linux environments it is often used in combination with rsync over ssh and, let’s be honest, often with fairly lazy sudo or root rights for that connection. This has a lot of disadvantages, but at least you can use the setup as a cheap distributed shell, since a well-maintained BackupPC server is likely to have access to a lot of your servers.

I wrote a small wrapper that reads the BackupPC configuration (specifically the layout used by the Debian/Ubuntu packages) and iterates through the hosts, letting you issue a command on every host with a valid connection. So far I have used it for listing the ssh keys in use, checking OS patch levels and even small system manipulations.

```bash
#!/bin/bash
# Run one command on every host listed in the BackupPC hosts file.
# Usage: ./exec_remote_command.sh "command with options"

SSH_KEY="/var/lib/backuppc/.ssh/id_rsa"

# Build root@host logins from the hosts-file lines that mention "root",
# skipping comment lines.
SSH_LOGINS=( $(awk '!/^#/ && /root/ {print "root@"$1}' /etc/backuppc/hosts) )

for SSH_LOGIN in "${SSH_LOGINS[@]}"
do
  HOST="${SSH_LOGIN#*@}"   # strip the "root@" prefix
  echo "--------------------------------------------"
  echo "checking host: ${HOST}"
  # Key-based login only: never fall back to a password prompt.
  ssh -C -qq -o "NumberOfPasswordPrompts=0" \
    -o "PasswordAuthentication=no" -i "${SSH_KEY}" "${SSH_LOGIN}" "$1"
done
```

You can easily change this to your needs (e.g. changing login user, adding sudo and so on).

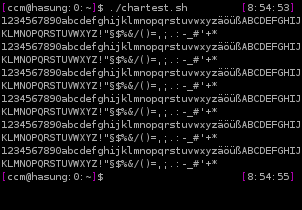

```
$ ./exec_remote_command.sh "date"
--------------------------------------------
checking host: a.b.com
Mo 9. Mai 15:40:26 CEST 2011
--------------------------------------------
checking host: b.b.com
[...]
```
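The adjustments mentioned above (a different login user, adding sudo) could look roughly like the following sketch. `REMOTE_USER`, `USE_SUDO` and the three helper functions are made-up names for illustration, not part of the original script, and the loop only prints what would be run instead of calling ssh.

```bash
#!/bin/bash
# Hypothetical settings; neither variable exists in the original script.
REMOTE_USER="${REMOTE_USER:-root}"  # account to log in as
USE_SUDO="${USE_SUDO:-no}"          # "yes" wraps the command in sudo

# Wrap a string in single quotes so the remote shell sees it as one word.
quote_for_sh() {
  printf "'%s'" "$(printf '%s' "$1" | sed "s/'/'\\\\''/g")"
}

# Print user@host for one host name.
build_login() {
  printf '%s@%s\n' "${REMOTE_USER}" "$1"
}

# Print the command string to pass to ssh. With USE_SUDO=yes the whole
# command line runs under "sudo -n sh -c", so pipelines are covered too;
# -n makes sudo fail instead of asking for a password.
build_remote_command() {
  if [ "${USE_SUDO}" = "yes" ]; then
    printf 'sudo -n sh -c %s\n' "$(quote_for_sh "$1")"
  else
    printf '%s\n' "$1"
  fi
}

# Dry run: show what would be executed for two example hosts.
for HOST in a.b.com b.b.com; do
  echo "ssh $(build_login "${HOST}") $(build_remote_command "uptime")"
done
```

In the wrapper itself, the two helper results would take the place of `${SSH_LOGIN}` and `"$1"` on the ssh line. Whether `sudo -n` works depends on the remote sudoers setup, which this sketch simply assumes.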

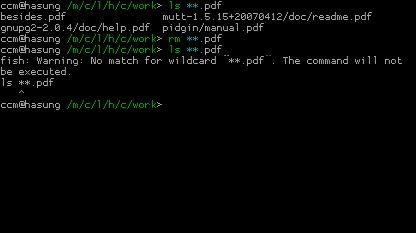

Make sure to quote your command, especially when using commands with options, so the script can handle the command line as one argument.
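A small sketch of why the quoting matters: the wrapper only passes `$1` to ssh, so anything after the first unquoted word is silently dropped. `show_args` is a hypothetical stand-in for the wrapper that just reports what it received.

```bash
#!/bin/bash
# Stand-in for the wrapper: report how many arguments arrived and what $1 is.
show_args() {
  echo "argument count: $#"
  echo "first argument: $1"
}

show_args "ls -l /tmp"   # quoted: one argument, the whole command line
show_args ls -l /tmp     # unquoted: three arguments, $1 is only "ls"
```

If quoting every call feels error-prone, one possible change is to pass `"$*"` instead of `"$1"` in the script, which joins all arguments with spaces before they reach ssh.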

A younger sister of the script is the following ssh key checker, which lists the ssh keys in use on each system, sorted by key comment (feel free to include the key itself):

```bash
#!/bin/bash
# List the comments of the ssh keys authorized on every BackupPC host.

SSH_KEY="/var/lib/backuppc/.ssh/id_rsa"

# Build root@host logins from the hosts-file lines that mention "root",
# skipping comment lines.
SSH_LOGINS=( $(awk '!/^#/ && /root/ {print "root@"$1}' /etc/backuppc/hosts) )

for SSH_LOGIN in "${SSH_LOGINS[@]}"
do
  HOST="${SSH_LOGIN#*@}"   # strip the "root@" prefix
  echo "--------------------------------------------"
  echo "checking host: ${HOST}"
  # Keys in the home directory of every account in /etc/passwd.
  ssh -C -qq -o "NumberOfPasswordPrompts=0" \
    -o "PasswordAuthentication=no" -i "${SSH_KEY}" "${SSH_LOGIN}" \
    "cut -d: -f6 /etc/passwd | xargs -I{} grep -E -s \
    '^ssh-' {}/.ssh/authorized_keys {}/.ssh/authorized_keys2" | \
    cut -f 3- -d " " | sort
  # Keys that newly created accounts would inherit from /etc/skel.
  ssh -C -qq -o "NumberOfPasswordPrompts=0" \
    -o "PasswordAuthentication=no" -i "${SSH_KEY}" "${SSH_LOGIN}" \
    "grep -E -s '^ssh-' /etc/skel/.ssh/authorized_keys \
    /etc/skel/.ssh/authorized_keys2" | cut -f 3- -d " " | sort
done
```

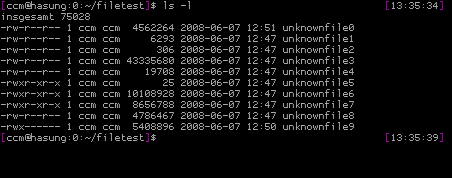

A sample output of the script:

```
$ ./check_keys.sh 2>/dev/null
--------------------------------------------
checking host: a.b.com
[email protected]
backuppc@localhost
some random key comment
--------------------------------------------
checking host: b.b.com
[...]
```
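The comment extraction can be tried locally without any ssh connection. The sketch below applies the same `grep`/`cut`/`sort` steps to a few made-up `authorized_keys` lines; `key_comments` is a hypothetical helper name and the key material is a placeholder.

```bash
#!/bin/bash
# Print the comment (third field onward) of each key line read from stdin.
key_comments() {
  grep -E '^ssh-' | cut -d ' ' -f 3- | sort
}

# Made-up sample input; the key blobs are placeholders, not real keys.
key_comments <<'EOF'
ssh-rsa AAAAB3Nza...EXAMPLE some random key comment
# comment lines do not match and are skipped
ssh-ed25519 AAAAC3Nza...EXAMPLE backuppc@localhost
EOF
```

Note that the `^ssh-` pattern only catches lines that begin with a key type such as `ssh-rsa`. Lines that start with options (for example `command="..."`) or with an `ecdsa-sha2-*` key type are not listed, so the pattern may need widening for a complete audit.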

That’s all for now. Don’t blame me for doing it this way; I am only the messenger.